AI Agents 101: What They Are — and Why PMs Should Care

How AI is evolving, what tools are leading the shift, and why PMs need a new mindset.

Hey, Dmytro here — welcome to The Atomic Product (free).

Every week, I share practical ideas, tools, and real-world lessons to help you grow as a product thinker and builder.

Hit subscribe if you’re not on the list yet, and let’s roll 👇

Not long ago, a colleague of mine admitted:

“I feel like I’m falling behind. Everything’s changing so fast. I just got used to ChatGPT — and now everyone’s talking about agents, orchestration, RAG, MCP... I can’t even keep up with the terminology.”

And he’s not alone.

A lot of smart, experienced professionals are realizing they can’t keep pace with how fast AI is evolving. The industry isn’t just moving fast — it’s accelerating.

And it’s no longer hype. This is a real shift.

AI agents have arrived. They don’t just help you — they act, make decisions, remember, trigger other services, and... change the very logic of how digital products work.

This article is my attempt to help people like my colleague (and maybe you too) cut through the noise — and stay ahead.

We’ll walk through:

– the steps we’ve already taken in AI (from LLMs to agents — and beyond)

– how agents differ from workflows

– what tools like Zapier, Make.com, and n8n are really bringing to the table

– what’s already working in the wild — and where all of this is heading

This isn’t an “Intro to AI” (that’s coming in a separate piece).

It’s a practical guide to help you stay grounded in a world that’s moving fast.

Let’s dive in.

How It Evolved: From LLMs to Agents (and Beyond)

When ChatGPT first launched, it felt like a smart calculator for words. You type — it replies. Sometimes witty, sometimes dry, but almost always within a “question–answer” format.

But things started to shift.

At some point, you stop asking “Can you summarize this article?” and start saying: “Read this doc, find key insights, look for similar case studies — and turn it into slides.”

And it works. No script. No hand-holding.

That’s when language models started quietly morphing into agents.

If we look back, the path has been surprisingly evolutionary. Here’s how it unfolded — in plain English:

Just LLM

Like a talkative companion. You ask — it answers.

📌 Example: “Write me a presentation outline” → you get 5 bullet points. That’s it. No docs, no memory, no tools.

LLM + Documents

Now it can read files and summarize content.

📌 Example: “Summarize this deck” → and it gives you a neat recap, like a student taking notes.

LLM + Tools

It can now use external tools — search, math, APIs.

📌 Example: “Find the lowest price for this product on Amazon and eBay” → and it actually does it.

LLM + Retrieval (RAG)

Instead of Googling, it looks into your internal docs and vector databases.

📌 Example: “Find all mentions of client X over the last 3 months” → and you get precise snippets from internal data.

Memory kicks in

It doesn’t forget what you said yesterday.

📌 Example: “Continue the strategy we discussed last week” → and it actually does, instead of starting from scratch.

Agents emerge

This isn’t just reaction anymore — it’s initiative.

📌 Example: “Plan my week” → the agent checks your calendar, sets priorities, writes emails, and sends you a summary. No clicks required.

Agent orchestration

One agent is good. A team of agents is better.

📌 Example: one drafts a blog post, another edits it, a third saves it to Notion. All hands-off.

MCP (Model Context Protocol)

Here’s the real shift. Agents can now “ask” what tools are available and how to use them.

📌 Example: “What can I do with Airtable?” → and it gets back a list of commands, formats, and limitations. No hardcoding, just live context.

Put simply: you used to build workflows step by step — like old-school PowerPoint animations. Now the agent builds its own path, selects the tools, and figures out how to reach the goal.

Workflow vs Agent: What’s the Difference — and Why It Matters

Let’s start with a scene. You wake up, and someone has already:

– sent your morning newsletter,

– rescheduled useless meetings,

– built your to-do list,

– and even signed your kid up for chess club.

You think: “Is this magic?” Nope. That’s an agent.

And this is where definitions start to matter. Because a lot of what people call “AI agents” today... is actually just automation.

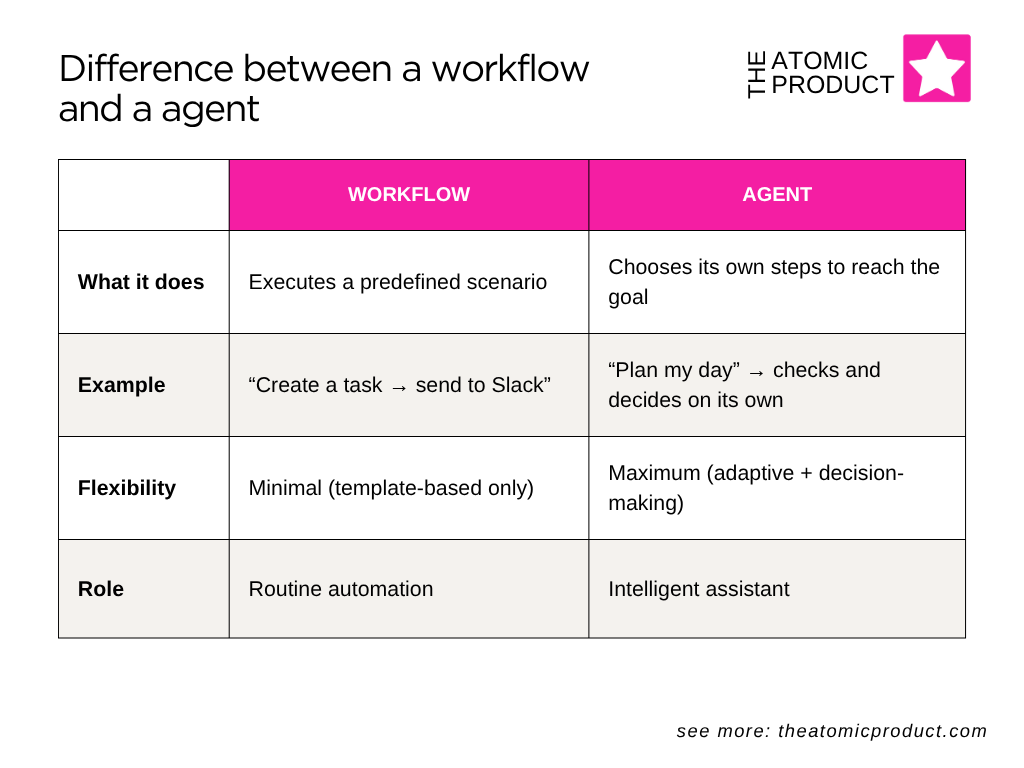

So what’s the real difference between a workflow and an agent?

In plain terms:

A workflow is like an Excel macro — useful, but rigid.

An agent is like a smart assistant — you say “make it good,” and it figures out what that means.
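To make the contrast concrete, here is a minimal Python sketch. Everything in it is hypothetical: the three step functions, the hard-coded calendar entries, and `choose_next_step`, which stands in for an LLM deciding what to do next. It is an illustration of the idea, not how any particular product works.

```python
from typing import Callable, Optional

# Hypothetical steps. In a real system each would call an external service.
def fetch_calendar(state: dict) -> dict:
    return {**state, "events": ["standup", "1:1", "status sync"]}

def set_priorities(state: dict) -> dict:
    return {**state, "priorities": sorted(state.get("events", []))}

def send_summary(state: dict) -> dict:
    return {**state, "summary_sent": True}

STEPS: dict[str, Callable[[dict], dict]] = {
    "fetch_calendar": fetch_calendar,
    "set_priorities": set_priorities,
    "send_summary": send_summary,
}

def run_workflow(state: dict) -> dict:
    """Workflow: the author fixed the order of steps in advance."""
    for name in ["fetch_calendar", "set_priorities", "send_summary"]:
        state = STEPS[name](state)
    return state

def choose_next_step(state: dict) -> Optional[str]:
    """Stand-in for the LLM: picks the next step by looking at the current state."""
    if "events" not in state:
        return "fetch_calendar"
    if "priorities" not in state:
        return "set_priorities"
    if not state.get("summary_sent"):
        return "send_summary"
    return None  # nothing left to do, the goal is reached

def run_agent(goal: str, max_steps: int = 10) -> dict:
    """Agent: it gets a goal and decides each step at run time."""
    state: dict = {"goal": goal, "trace": []}
    for _ in range(max_steps):  # hard cap so a confused planner cannot loop forever
        step = choose_next_step(state)
        if step is None:
            break
        state = STEPS[step](state)
        state["trace"].append(step)
    return state
```

Calling `run_agent("Plan my week")` ends up running the same three steps as the workflow, but the order lives in the planner's decisions rather than in a list someone wrote down. Swap the planner for a real model and the path can change from run to run.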

How We Got Here: A Hands-On History

The difference between workflows and agents is easiest to grasp when you look at the tools we’ve used over time.

It started with Zapier — dry, structured, but effective. No visuals, just forms and logic chains. I still remember setting up “new lead → welcome email → CRM entry” and feeling like I’d just built a mini-robot. Even though it was just a sequence of dropdowns.

Then came Make.com — and everything got visual. Arrows, blocks, filters, loops. Suddenly it felt like system design, not just task automation. I remember thinking, “Why didn’t Zapier ever make it this intuitive?”

And then n8n entered the scene with a bold new question: “What if we stopped scripting every step — and just gave the system a goal?” Now you can say, “Plan my week,” and it decides what steps to take, which data to pull, and where to send it. It’s no longer automation. It’s initiative.

To sum it up:

– Zapier is a macro

– Make is a visual editor

– n8n is already becoming a digital assistant

So who’s really ready for the AI agent era?

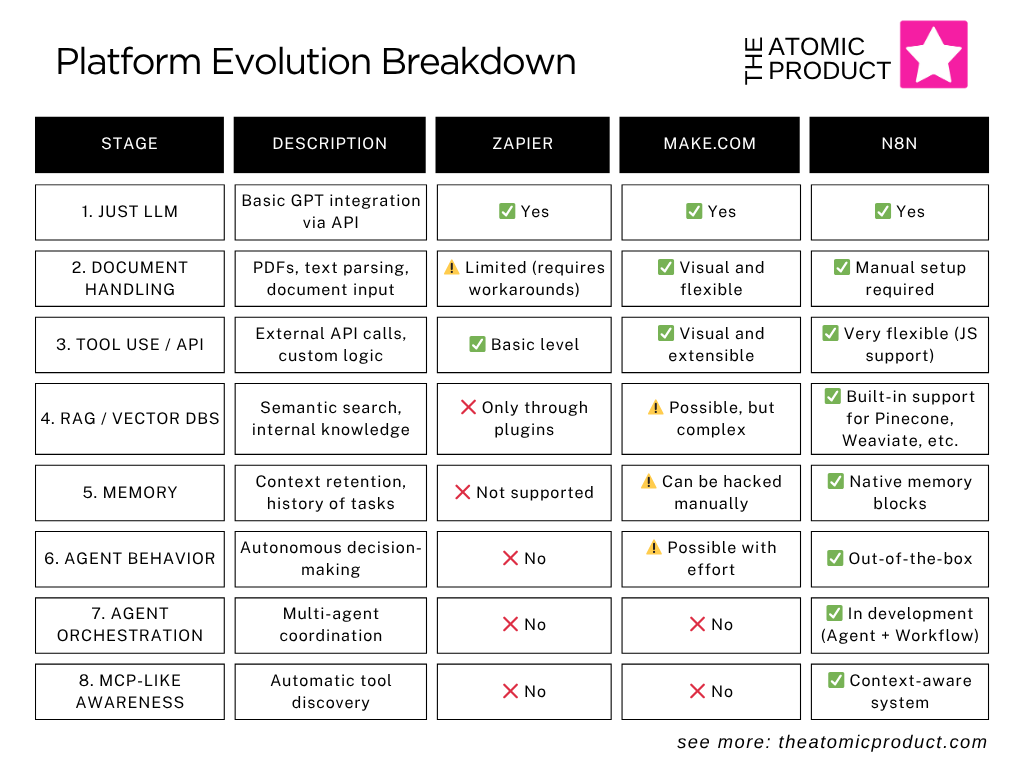

Let’s take a closer look at how three popular automation platforms — Zapier, Make.com, and n8n — stack up in a world that’s moving from rule-based flows to true AI agents. We’ll walk through key capabilities, from basic GPT integration to memory, RAG, orchestration, and agent behavior.

What does this mean in practice?

Zapier is great for “If A, then B” logic. You can hook up GPT, but everything else — memory, context, decisions — is manual. It’s a template machine, not a smart system.

Make.com gives you serious flexibility. You can build complex flows, use OpenAI, parse documents. But in the end, it’s still a workflow: it does what you told it to do, nothing more.

n8n plays in a different league. It has a built-in AI agent, memory, vector search, JavaScript support, and even something close to an MCP system. It doesn’t just follow orders — it makes its own decisions about what tools to use, and in what sequence. That’s not a macro or a flow. That’s a digital teammate.

What an AI Agent Is Made Of: Architecture, Not Magic

An AI agent isn’t just a “supercharged ChatGPT.”

It’s a modular system — a composition of tools that work together to receive a task, process it, make decisions, and take action.

In practice, you don’t use “one big assistant.” You build a stack, where each component plays its role. Here are the core pieces:

1. The Brain (LLM)

This is the engine that understands text, interprets commands, and generates responses.

Depending on the task, you can use different models:

ChatGPT – a universal conversationalist, great for general reasoning and content generation.

Claude – softer tone, excellent at analyzing documents and rewriting.

Perplexity – great for pulling info from external sources (web search).

Mistral, LLaMA, GPT-NeoX – open-source and open-weight options for self-hosted setups.

The LLM is the heart of your agent — but without memory, tools, or contextual awareness, it’s still “just” a smart chatbot.

2. Memory

LLMs operate on a “here and now” basis. Without memory, they forget what happened 10 steps ago.

That’s where memory systems come in:

Short-term memory (context window) – built into the LLM but limited (from a few thousand tokens in older models to hundreds of thousands or more in current ones).

Vector databases (e.g. Pinecone, Weaviate, Qdrant) – allow you to store and semantically retrieve chunks of information.

Long-term databases (PostgreSQL with JSON, Supabase, etc.) – good for structured storage of sessions and user data.

Memory makes agents smarter. They can now “remember” previous tasks, conversations, or user preferences — and make better decisions as a result.
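Here is a toy sketch of the "store and semantically retrieve" idea. It is deliberately simplified: `embed` just counts words, where a real setup would call an embedding model and keep the vectors in a vector database. The `Memory` class and its method names are my own invention for illustration.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class Memory:
    """Hypothetical in-process memory: stores text and recalls the closest matches."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def remember(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

Usage is two calls: `memory.remember(...)` after each interaction, and `memory.recall(question)` before the next LLM call, with the recalled text pasted into the prompt.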

3. Tools (APIs, Plugins, External Systems)

LLMs can plan, but on their own they can’t act.

They can’t send an email, create a task, or check today’s exchange rate.

That’s why we add tools — external APIs and plugins:

Google Calendar API – schedule meetings, fetch availability.

HubSpot API – create a contact, update a deal.

Jira / Notion / Slack / GitHub – your internal stack, accessible via API.

You typically connect these tools via middleware like n8n, LangChain agents, Make.com, or your own backend.
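Under the hood, the pattern usually looks something like this: the model outputs a structured request, and your code decides which function to run. The sketch below assumes the model replies with JSON of the form `{"tool": ..., "arguments": ...}`; that format, the `tool` decorator, and both stub functions are assumptions for illustration, not a specific vendor's API.

```python
import json
from typing import Callable

TOOLS: dict[str, Callable[..., dict]] = {}

def tool(fn: Callable[..., dict]) -> Callable[..., dict]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def create_task(title: str, assignee: str) -> dict:
    # Stub: a real version would call the Jira or Notion API here.
    return {"status": "created", "title": title, "assignee": assignee}

@tool
def send_email(to: str, subject: str) -> dict:
    # Stub: a real version would call an email service here.
    return {"status": "queued", "to": to, "subject": subject}

def dispatch(model_output: str) -> dict:
    """Parse the model's JSON request and run the matching tool, if it exists."""
    call = json.loads(model_output)
    name = call["tool"]
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    return TOOLS[name](**call.get("arguments", {}))
```

The useful property here is that the model never touches your systems directly. It can only ask, and `dispatch` only runs what you registered.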

To use tools, the agent needs to know what’s available — and that’s where context comes in.

4. Context & MCP (Model Context Protocol)

You can’t expect the agent to magically “know” what tools exist.

You either hardcode them or provide a dynamic description — that’s what MCP does. It describes:

Available tools

Input/output formats

How to call them

What actions are permitted

For example, instead of hand-coding “Use this POST to Airtable,” you describe the tool in a machine-readable schema (MCP uses JSON Schema for tool inputs). The agent reads it and calls the tool with the right arguments.

The MCP approach is scalable: Add a new tool → Describe it → The agent knows how to use it.
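As a rough sketch, a tool description can be as small as the dictionary below. The `name` / `description` / `inputSchema` shape is loosely modeled on how MCP describes tools; the `create_record` tool itself is hypothetical, and `validate_arguments` is a simplified check I wrote for this example, not a full JSON Schema validator.

```python
# Hypothetical tool description, loosely following the MCP tool shape.
TOOL_DESCRIPTION = {
    "name": "create_record",
    "description": "Create a row in a table.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "table": {"type": "string"},
            "fields": {"type": "object"},
        },
        "required": ["table", "fields"],
    },
}

# Only the handful of JSON types this sketch needs.
_JSON_TYPES = {"string": str, "object": dict, "number": (int, float), "boolean": bool}

def validate_arguments(description: dict, arguments: dict) -> list[str]:
    """Return a list of problems with the arguments; empty means the call looks valid."""
    schema = description["inputSchema"]
    problems = []
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required argument: {key}")
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected argument: {key}")
        elif not isinstance(value, _JSON_TYPES[spec["type"]]):
            problems.append(f"{key} should be of type {spec['type']}")
    return problems
```

The same description does double duty: it goes into the model's context so the agent knows the tool exists, and it lets your code reject malformed calls before they reach the real API.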

5. Execution Environment (Orchestration)

Finally, you need something that runs all of this.

Where does the LLM get its task?

How does it decide what to do next?

Who manages the chain of actions?

Options include:

LangChain / CrewAI / AutoGen – for building complex agent logic

n8n / Make.com – for visual orchestration with embedded LLMs

Custom backend (FastAPI, Express.js) – when you need full control

This is how modern AI agents are built — not as one big brain, but as systems that combine memory, reasoning, tools, and coordination.

And now that we’ve broken down how agents work under the hood — let’s take a step forward.

What trends are shaping the future of this technology?

And more importantly — what does that mean for us as product managers, developers, and team leads?

What’s Next? 3 Trends Reshaping the Game

While some teams are just experimenting with agents for small tasks, others are already building workflows, roles, and even full departments around them.

Here are three shifts every future-proof PM should be aware of.

1. Agent Teams: When One Isn’t Enough

It used to be simple: one agent = one task.

But what happens when there are ten tasks — and they depend on each other?

That’s where agent orchestration comes in: one agent doesn’t do everything but delegates to others.

Real-life example:

One agent retrieves the documents

Another drafts the content

A third edits and publishes it

A fourth monitors performance and triggers follow-up

You used to need a human for this. Now it’s a YAML file.

Frameworks like CrewAI, AutoGen, and LangGraph make this multi-agent setup surprisingly real and accessible.
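Stripped of framework details, the core idea is a hand-off chain. The sketch below is plain Python and does not use the CrewAI, AutoGen, or LangGraph APIs; the `Agent` class, `run_team`, and the lambda "agents" (stand-ins for LLM calls with role-specific prompts) are all made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    role: str
    act: Callable[[str], str]  # stand-in for an LLM call with a role-specific prompt

def run_team(agents: list[Agent], task: str) -> tuple[str, list[str]]:
    """Pass the work product from one agent to the next and log who handled it."""
    log: list[str] = []
    work = task
    for agent in agents:
        work = agent.act(work)
        log.append(agent.role)
    return work, log

# A three-agent team: each one only sees the previous agent's output.
team = [
    Agent("researcher", lambda task: f"notes on: {task}"),
    Agent("writer", lambda notes: f"draft based on {notes}"),
    Agent("editor", lambda draft: draft.capitalize() + "."),
]
```

Real frameworks add the interesting parts on top of this: branching, retries, shared memory, and agents that decide whom to hand off to. But the log of who touched what is worth keeping even in the simplest version.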

2. MCP: When Tools Describe Themselves

Even a smart agent needs to know what tools it can use.

Enter Model Context Protocol (MCP): an open protocol, introduced by Anthropic in late 2024, that lets tools describe what they can do in a standard format.

Example:

An agent connects to Airtable and gets a list of available actions:

"I support GET, POST, filtering, here are my parameters."

This speeds up development — the agent picks the right tool based on context.

But here's the catch: MCP is a trust contract. If a tool lies or suggests a dangerous command, the agent might execute it blindly.

That’s why AI agent security, governance, and trust frameworks are quickly gaining traction.
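One simple guardrail is to never let the tool's self-description be the final word. The sketch below assumes you keep your own allowlist on the agent side; the action names and the `review_call` function are hypothetical, and a real policy layer would be considerably richer.

```python
# Hypothetical policy, owned by your team rather than by the tool.
ALLOWED_ACTIONS = {"list_records", "get_record", "create_record"}
NEEDS_APPROVAL = {"create_record"}  # actions that change data

def review_call(action: str, approved_by_human: bool = False) -> str:
    """Decide what to do with a tool call the agent wants to make."""
    if action not in ALLOWED_ACTIONS:
        return "blocked"  # the tool advertised it, but we never agreed to it
    if action in NEEDS_APPROVAL and not approved_by_human:
        return "needs_approval"  # pause and ask a person first
    return "allowed"
```

So even if a tool suddenly advertises a `delete_base` action, the agent cannot run it: `review_call("delete_base")` comes back as `"blocked"`.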

3. Specialization: Agents with Character

Generic GPTs are like Swiss Army knives: decent at everything, masters of none.

But in real-world practice, specialized agents win.

Examples:

A finance agent who understands reporting formats

A legal agent trained to review contracts

A marketing agent focused on e-commerce analytics

You don’t train them from scratch.

You just set the role:

"You’re a copywriter. Write like Brand X. Avoid jargon. Target C-levels."

And it works.

These role-based agents are already being embedded in CRMs, analytics tools, and customer support platforms.
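In code, "setting the role" usually means writing a system message. Here is a small sketch that assembles one in the common system/user chat message format; the `build_messages` helper and its parameters are my own, and you would pass the result to whichever model API you use.

```python
def build_messages(role: str, brand: str, audience: str,
                   rules: list[str], request: str) -> list[dict]:
    """Assemble a role-setting system message plus the user's request."""
    system = f"You are a {role}. Write like {brand}. Target {audience}."
    if rules:
        system += " " + " ".join(rules)
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": request},
    ]

messages = build_messages(
    role="copywriter",
    brand="Brand X",
    audience="C-levels",
    rules=["Avoid jargon."],
    request="Write a launch email.",
)
```

Keeping the role in a function like this, instead of a hand-typed string, makes it easy to version, test, and reuse the same "character" across products.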

AI agents are no longer just a “cool feature.”

They’re becoming a new interface layer, where you don’t click buttons — you set goals.

Final Thought

When ChatGPT first showed up, the title “AI Product Manager” started making noise — but it didn’t mean much.

Everyone was talking about the “art of prompting” and treating it like a new profession.

In reality, ChatGPT already understood most prompts just fine. The so-called “prompt engineers” felt more like hype merchants than real specialists.

That started to shift with the rise of AI workflows.

And now — with agents — we’re in a whole new territory.

You can’t just “ask the model.”

You need to understand how the whole system works — how data flows, where context is stored, how tools connect behind the scenes.

And that changes the PM role, too.

You're no longer just the person saying “let’s add ChatGPT to the product.”

You’re designing how the system behaves: how an agent makes decisions, how it learns, how it interacts with others.

You’re not writing code — but you need to understand the architecture.

The real AI PM isn’t a “prompt whisperer.”

They’re a behavior architect — someone who sets the goals, boundaries, and logic for a new kind of digital entity.

That’s what makes AI Product Management real.

No magic. No hype.

Just a new skill set — a little technical, a lot logical, and 100% hands-on.

Thanks for reading The Atomic Product.

Stay curious and stay sharp

— Dmytro