LLM, ML, AI: What’s the Difference — and Why It Matters for Product Managers?

Let’s get your AI knowledge up to speed. Cut the fluff. Use AI where it matters.

Hey, Dmytro here — welcome to Atomic Product (free edition).

Every week, I share practical ideas, tools, and real-world lessons to help you grow as a product thinker and builder.


Hit subscribe if you're not on the list yet, and let’s roll 👇

Seems like every other startup these days calls itself an “AI-powered platform for smarter workflows.”

And every other PM writes in their resume: “building with AI.”

But let’s be honest — most people are just winging it.

– What’s ML vs. Generative AI?

– How is an LLM different from plain old AI?

– And most importantly — what does this all mean for you as a product manager?

When everything is becoming “AI-powered,” it’s crucial to know what’s actually under the hood.

Not for the buzzwords — but so you can:

– avoid falling for hype and the promise of magic buttons

– understand what the tools can (and can’t) do

– and, finally — integrate AI into real product features, not just add shiny “Generate” buttons

This article is your short, practical breakdown of key terms — with real-life examples where PMs are already putting them to work.

AI, ML, LLM, Generative AI — what’s the actual difference?

If you’re confused by all the acronyms — don’t worry.

Even some so-called “AI specialists” throw these terms around without knowing where one ends and the next begins.

Let’s break it down. In plain English — with product examples.

AI (Artificial Intelligence) is the big umbrella.

Anything that lets a machine “think,” make decisions, or draw conclusions — things only humans used to do — now counts as AI.

If your inbox automatically sorts emails into Important vs. Promotions — that’s AI.

📌 Examples: Gmail Smart Reply, Google Translate, Tesla Autopilot

ML (Machine Learning) is how AI is usually built.

Instead of writing all the rules manually, we give the model a ton of examples — and it learns to recognize patterns.

For instance, a bank’s fraud detection system studies thousands of shady transactions — and then catches similar ones on its own.

📌 Examples: Amazon Recommendations, LinkedIn “People You May Know,” Grammarly
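To make "learning from examples" concrete, here is a toy sketch. Nobody hard-codes the fraud rule; the code picks the cutoff that best fits the labeled examples. The data, the single "amount" feature, and the function names are all made up for illustration. Real fraud models look at many more signals.

```python
# Toy illustration: learn a fraud cutoff from labeled examples
# instead of hard-coding it. All numbers are invented.

def learn_threshold(examples):
    """Pick the amount cutoff that misclassifies the fewest examples."""
    candidates = sorted(amount for amount, _ in examples)
    best_cutoff, best_errors = None, len(examples) + 1
    for cutoff in candidates:
        # Predict "fraud" when the amount is at or above the cutoff.
        errors = sum((amount >= cutoff) != is_fraud for amount, is_fraud in examples)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff

# (transaction amount, was it fraud?)
training_data = [
    (20, False), (35, False), (50, False), (80, False),
    (900, True), (1200, True), (1500, True),
]

cutoff = learn_threshold(training_data)

def looks_fraudulent(amount):
    return amount >= cutoff
```

Change the examples and the learned rule changes with them. That is the core idea behind ML: the behavior comes from data, not from a hand-written if-statement.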

Deep Learning is a turbocharged subset of ML.

Here, models go deep — literally. They use neural networks with dozens or even hundreds of layers. This makes them great at handling complex inputs like text, images, speech, or video.

If ML is like a high-schooler, Deep Learning is a neuroscience grad.

📌 Examples: facial recognition, live translation, voice cloning

And yes — LLMs are built using Deep Learning. They’re just specialized for text.

LLMs (Large Language Models) are a specific type of model designed to work with language.

They’re trained on billions to trillions of words, so when they talk to you, it feels like chatting with a philosophy major.

They’re not pulling answers from a database. They generate them on the fly, one token (roughly a word or word fragment) at a time, based on probabilities. Sounds boring, works like magic.

📌 Examples: ChatGPT, Claude, Gemini, Mistral
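If "generated from probabilities" sounds abstract, here is a deliberately tiny sketch of the idea. The probability table is invented for illustration. A real LLM computes these probabilities with a neural network over a huge vocabulary, but the loop is similar in spirit: pick the next word, append it, repeat.

```python
import random

# Hypothetical next-word probabilities; a real LLM computes these
# with a neural network instead of a lookup table.
NEXT_WORD_PROBS = {
    "the": {"user": 0.6, "product": 0.4},
    "user": {"clicks": 0.7, "leaves": 0.3},
    "product": {"ships": 1.0},
    "clicks": {"<end>": 1.0},
    "leaves": {"<end>": 1.0},
    "ships": {"<end>": 1.0},
}

def generate(start, rng, max_words=10):
    words = [start]
    while len(words) < max_words:
        options = NEXT_WORD_PROBS[words[-1]]
        # Sample the next word in proportion to its probability.
        next_word = rng.choices(list(options), weights=list(options.values()))[0]
        if next_word == "<end>":
            break
        words.append(next_word)
    return " ".join(words)

print(generate("the", random.Random(0)))
```

Because the next word is sampled rather than looked up, the same prompt can produce different answers on different runs. That is worth remembering when you design features on top of an LLM.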

Important to know:

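LLMs aren’t the only game in town. There are vision models like SAM (Segment Anything, for image segmentation), VLMs (vision-language models, for text + image), and more. MoE (mixture of experts), by the way, is an architecture that many LLMs themselves use, not a separate kind of model.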

But LLMs dominate most product use cases today: messaging, analysis, ideation, automation.

Most LLMs are built using transformers — a type of neural network architecture that’s great at handling context and meaning. You don’t need to go deep into it, but it’s good to know it exists.

Generative AI isn’t a model — it’s a capability.

If AI helps decide, Generative AI helps create.

Text, images, code, music — you give it a prompt, it gives you something new.

All LLMs are part of Generative AI, but not all Generative AI is based on LLMs.

📌 Examples:

— Text: ChatGPT, Jasper, Copy.ai

— Images: Midjourney, DALL·E

— Video: Runway, Pika

— Music: Suno, Udio

📍Quick recap:

• AI — the umbrella

• ML — the learning method

• Deep Learning — souped-up ML using neural nets

• LLM — language-focused models

• Generative AI — anything that creates, not just responds

If that made sense — great.

If your brain still feels a bit scrambled — don’t worry.

Next up, we’ll look at how this actually connects to your work as a product manager.

Why should PMs even care about these terms?

Simple:

AI is getting deeper into our products, features, and internal processes.

At first, it was enough to throw around “AI,” “ML,” or “LLM.” But the landscape keeps evolving — and so do the opportunities.

Now we’re dealing with the next layer: AI workflows, AI agents, RAG, memory, and more.

And these aren’t just buzzwords from conference slides. They’re practical tools that open new doors for product teams:

• Automate repetitive tasks (for your team or users)

• Rapidly build MVPs using pre-trained models

• Launch features that used to require 10× the time and budget

But to actually use these tools, you need to understand what’s behind them — at least at a basic level.

Not just “an LLM is a big model,” but:

“Right — this means I can build a bot that reads my documents, understands the context, and pulls data from external APIs — not just answers chat prompts.”

That’s why it’s worth getting familiar with the layers. And that’s exactly what we’ll do next — walk through how a simple ChatGPT bot evolves into a full-blown AI agent.

Step by step. No magic required.

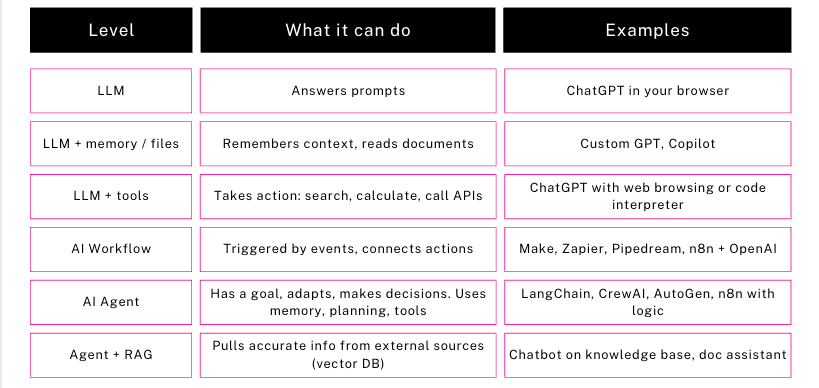

From ChatGPT to AI Agents: Step by Step 🔄

When people hear “AI in the product,” they still often picture ChatGPT — a chatbot you type into, and it replies.

But that’s just the starting point.

AI tech is evolving fast — and so are expectations for what we can actually build with it.

📌 From casual chatting → to advanced automation and decision-making.

To avoid repeating myself — I already broke down this evolution in detail in a previous article:

🔗 [AI Agents 101: What They Are — and Why PMs Should Care]

That article covers each step, from:

– memoryless LLMs,

– to tools with data access,

– to full AI agents with planning, memory, and tool usage.

👉 If you haven’t seen it yet — I highly recommend checking it out.

It’s packed with real examples, no fluff, and an easy-to-follow visual.

For now, here’s a quick snapshot to understand the spectrum 👇

🧠 RAG (Retrieval-Augmented Generation) is a method to connect your LLM to actual data.

At question time, it retrieves the most relevant passages from your sources (help center, CRM, wiki, you name it) and adds them to the prompt. So the model gets not just “intelligence,” but access to relevant info.

If you're building an AI assistant that needs to answer real product or policy questions — you probably need RAG.
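Here is a minimal sketch of the RAG idea: find the most relevant snippet, then put it into the prompt. The documents and function names are hypothetical, and the word-overlap scoring is a stand-in I chose to keep the example self-contained. Production setups typically use embeddings and a vector search instead.

```python
import re

# Hypothetical help-center snippets; in a real setup these come from your docs.
DOCUMENTS = [
    "Refunds are available within 30 days of purchase.",
    "You can export reports as CSV from the Analytics tab.",
    "Team plans include up to 10 seats.",
]

def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, documents):
    # The retrieved text becomes context the model is asked to rely on.
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

prompt = build_prompt("How many days do I have to request refunds?", DOCUMENTS)
print(prompt)  # this prompt would then be sent to the LLM of your choice
```

The model never "learns" your docs here. It just reads the retrieved passage each time, which is why answer quality depends heavily on what the retrieval step finds.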

Want to go deeper? Here's a hands-on guide to building your own RAG-based AI assistant:

📌 Guide: Build your first RAG-based assistant

Just getting started with prompt writing?

📌 Prompt Engineering Guide (Google Cloud)

📌 OpenAI Cookbook Prompting Guide

🧩 Now let’s break down the real difference between an AI workflow and an AI agent — and why it matters more than you think.

AI Workflow or AI Agent — What Counts as “Real AI”?

You’ll often hear someone say they “built an agent” — but in reality, it’s just a two-step Make automation:

receive an email → save it to Notion.

Or the opposite: a product executes a complex multi-layered flow, but no one on the team calls it an agent — because “we just fine-tuned a model.”

🎯 Everything gets blurred. And yeah, that makes it harder to communicate —

whether you're working with your team, pitching to investors, or hacking through a weekend project.

Let’s clear it up. No fluff.

🧩 AI Workflow = Automation with a Brain

Think of it like your usual automation — but with a touch of intelligence.

Example: you build a Make scenario that:

• collects form submissions from your website,

• sends them to GPT for a short summary,

• and posts the output to Slack.

Smart? Sure.

But it’s still a linear pipeline. GPT is just one clever step in the flow — not an autonomous player.

📌 Tools: Make, Zapier, Pipedream, n8n + OpenAI API

📌 Your PM role: Spot opportunities to embed AI — to remove manual work or speed up decision-making.
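The Make scenario above, sketched as code to show how linear it is. The `summarize` and `post_to_slack` functions are stand-ins I made up so the example runs on its own. A real workflow would call an LLM API and the Slack API at those steps.

```python
def summarize(text):
    # Stand-in for the GPT step; a real workflow would call an LLM API here.
    first_sentence = text.split(".")[0].strip()
    return first_sentence + "."

def post_to_slack(message, channel):
    # Stand-in for the Slack step; here we just record the message.
    channel.append(message)

def run_workflow(submissions, channel):
    # Fixed order, every time: collect -> summarize -> post.
    for submission in submissions:
        post_to_slack(summarize(submission), channel)

slack_channel = []
run_workflow(
    ["Export is broken. It fails on large files.", "Love the new dashboard. Thanks!"],
    slack_channel,
)
```

Notice there is no decision anywhere in `run_workflow`. Every submission goes through the same steps in the same order, no matter what it says.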

🧠 AI Agent = Autonomous Behavior

Now compare that to an agent. You say:

“Process incoming requests, prioritize them, and post a summary to Slack — but only if the priority is over 7/10.”

What does the agent do?

• It analyzes the inputs,

• figures out which ones are worth acting on,

• decides what to send, and to whom,

• remembers which requests were already processed,

• and adapts the steps if something breaks.

It’s not just following instructions — it’s working toward a goal.

More like an assistant than a pipeline.

📌 Tools: n8n with decision logic, LangChain, CrewAI, AutoGen

📌 Your PM role: Define the behavior, constraints, and goals.

Not coding — designing what should happen and why.
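For contrast with a linear pipeline, here is a rough sketch of the behaviors listed above: scoring, a priority threshold, memory of what was processed, and a fallback when something breaks. Everything in it is hypothetical, including the keyword-based `score_priority`, which stands in for an LLM judgment. A real agent framework would let the model choose its own steps, so treat this as an illustration of the behavior, not of how LangChain or CrewAI work internally.

```python
def score_priority(request):
    # Stand-in for an LLM judgment; a keyword heuristic keeps the sketch runnable.
    text = request["text"].lower()
    if "outage" in text or "cannot log in" in text:
        return 9
    if "bug" in text:
        return 6
    return 3

def run_agent(requests, send, fallback_send, processed_ids, threshold=7):
    """Post only requests scoring above the threshold, skipping ones already handled."""
    for request in requests:
        if request["id"] in processed_ids:
            continue  # memory: do not handle the same request twice
        priority = score_priority(request)
        if priority > threshold:
            message = f"[P{priority}] {request['text']}"
            try:
                send(message)
            except ConnectionError:
                fallback_send(message)  # adapt when the primary channel fails
        processed_ids.add(request["id"])

slack, email = [], []

def flaky_slack(message):
    # Simulated failure so the fallback path is exercised.
    if "outage" in message:
        raise ConnectionError("Slack is unreachable")
    slack.append(message)

seen = {2}  # request 2 was handled in an earlier run
run_agent(
    [
        {"id": 1, "text": "Payment outage in EU"},
        {"id": 2, "text": "Users cannot log in"},
        {"id": 3, "text": "Minor bug in footer"},
        {"id": 4, "text": "Customer cannot log in after reset"},
    ],
    send=flaky_slack,
    fallback_send=email.append,
    processed_ids=seen,
)
```

As a PM, the parts you would own here are the threshold, what counts as "already processed," and what should happen when the main channel fails.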

🤔 So where’s the line?

That’s the tricky part — it’s all starting to blur:

– Some workflows in Make are almost agents already

– And some “agents” are just glorified workflows with fancy labels

So here’s a simple way to tell the difference:

👉 Workflow answers: "What to do and when?"

👉 Agent answers: "What am I trying to achieve — and how can I get there?"

📌 If you’re defining goals (not just steps) — you’re building an agent.

📍And yes — next we’ll look at what that actually means for you as a Product Manager:

How to design these systems, what real-world use cases look like, and where to even begin.

How a Product Manager Can Use LLMs, Workflows, and Agents in Real Work

So — you’ve figured out what counts as an agent and what’s just a clever workflow.

Now comes the real question:

How can this actually help in product work?

The answer depends on your team’s maturity, infrastructure, and use cases.

But here are three practical scenarios where AI tools actually work for the PM — not just entertain them in a browser:

📌 Scenario 1: Fast automation without devs

What you use: ChatGPT, Make, Zapier

What you automate: one-off tasks, quick wins, repetitive work

Examples:

• Enrich incoming leads with company info from the web

• Turn call transcripts into summaries and send them to Notion

• Prompt-check your PRD for logical gaps

💡 PM Bonus:

You don’t need engineers. You don’t write code.

You just do it yourself.

Low barrier, visible results.

📌 Scenario 2: Custom AI tool for your team

What you use: Custom GPT, Copilot, Make + GPT API

What you automate: repeated requests, content generation, personalization

Examples:

• A Custom GPT that knows your company’s support processes

• An AI assistant that writes cold emails based on client profiles

• A generator that creates test cases from user stories in the backlog

💡 PM Bonus:

You build an actual “AI feature” — no backend required.

Speed is high, and the output quality depends on your prompts and internal knowledge base.

📌 Scenario 3: Designing logic for complex processes

What you use: n8n, LangChain, AutoGen, CrewAI

What you automate: tasks with logic, adaptation, memory

Examples:

• An agent processes support tickets: classifies, detects duplicates, routes to the right team

• An agent scrapes competitor data, aggregates it, and sends weekly insights

• An AI assistant reviews pull requests and comments when something breaks the rules

💡 PM Bonus:

You define goals, roles, and behavior.

You’re not coding — you’re architecting logic.

You might need a technical helper, but you are the conductor.

🛠 Alternative Use Cases: What This Looks Like in Real Life

If the above still feels abstract, here are three real examples of how AI is already helping in product work:

1. 🤯 “No one reads our Help Center — and Support is drowning in repeat questions”

The pain:

Support wastes hours answering the same questions.

You don’t want to pay for Zendesk AI or build a bloated chatbot.

The fix:

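Build an assistant grounded in your FAQ, internal docs, and guidelines. A Custom GPT is the quickest way to prototype it, but note that it isn't retrained on your files (it looks them up at answer time) and it lives inside ChatGPT, so a widget on your own site typically means using the API instead.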

Embed it on your site with a chat widget.

Users ask questions, get answers — from a bot that knows your product.

Result:

• Up to 50% of repeat requests are handled without human support

• Support team is thankful for “a real assistant”

• Users are happier — they get precise answers, not just buttons and links

2. 📊 “We have data — but I still run to the analyst every time”

The pain:

You want to see key metrics (like Retention) fast, but SQL isn’t your thing, and dashboards are often outdated.

The fix:

Connect AI to your BI tool (e.g., ChatGPT + Metabase or Google Sheets API).

You type: “Show Retention for cohort X” — and get a chart.

Follow up with: “Compare to campaign Y.”

Result:

• Less manual work

• Closer to the data

• Faster, data-informed decisions

3. ⚙️ “Everything’s automated… but 80% still happens manually”

The pain:

You built a workflow in Make: lead → GPT summary → Slack.

Nice. But you still manually check relevance and remove duplicates.

The fix:

Add agent logic in n8n:

• Agent identifies high-value leads

• Checks for duplicates

• Tracks what’s been processed

• Sends notifications only when needed

Result:

• Less noise

• Less routine

• The team starts trusting automation

Wrapping up

AI tools for PMs aren’t about the future. They’re about the now. It’s not about a bot writing your backlog. And you don’t need to be an ML engineer.

But here’s what you do need:

• Know when AI solves a real problem — and when it’s just hype

• Break down agent behavior: what should happen, under what conditions, with what data

• Explain to your team why you’re building a system — not just writing another prompt

• Evaluate risks: privacy, answer quality, need for human review

🧩 And most importantly — don’t be afraid to experiment. The real entry barrier to AI today isn’t technical.

It’s product thinking.

💡 Want to go deeper? A couple more useful links:

Here's a curated list that covers the full GenAI landscape — from tools to best practices: 📌 Awesome Generative AI Guide

If you want to test different LLMs side by side — 📌 this tool lets you switch between GPT, Claude, and Gemini in one window. Great for testing and side projects. Just $10/month. (I’m not affiliated.)

Thanks for reading Atomic,

Stay in touch 😉

— Dmytro